Azure

How to move Azure blobs up the path

This is the short but, for me, pretty intense story of when I uploaded 900 blobs of 1 GB each to the wrong path in a storage container. Eventually I was able to move these files using AzCopy and PowerShell.

Thanos persistent Prometheus metrics

In our Azure Kubernetes environment (AKS) we use Prometheus and Thanos for application metrics. Thanos allows us to use Azure Storage for long-term retention and to run Prometheus in a highly available setup. The other day I was challenged with deleting a set of metrics that caused high cardinality, meaning that a lot of new data series were being written because a parameter was inserted during scraping.

The way Thanos works is that it takes raw Prometheus data, downsamples it, and uploads it to Azure Storage for long-term retention. Each time this process runs, it creates a new blob. In our production environment we had around 900 blobs and 900 GB of data.

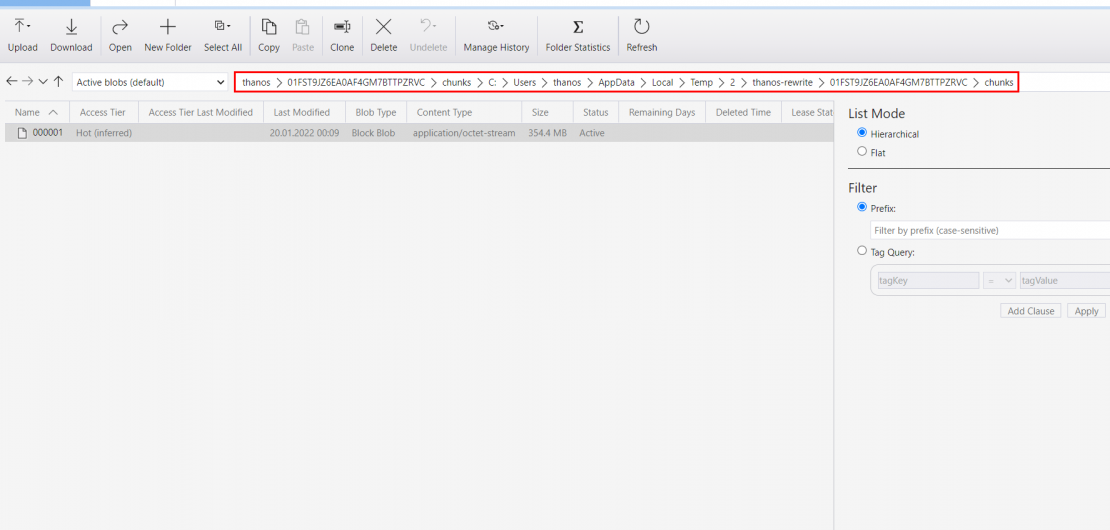

Thanos has a built-in tool to rewrite these blobs and remove the metric we wanted. That seemed easy enough, but we had no idea when the problem first started, so I had to analyze, rewrite and upload all the data. It all seemed to work fine, until I discovered that no metrics were available. It turned out that the tool I used had inherited my local path and uploaded all the modified data to <guid>/chunks/c:/users/appdata/local/[...]/00001.blob

So no matter how satisfied I was, all the data was useless, as Thanos expected the files to be under <guid>/chunks/00001. On the bright side, all the data was there, so the challenge was to move the files from <guid>/chunks/c:/users/appdata/local/[...] to <guid>/chunks/. From the two pictures below you can see the folder structure. Going through a download and upload approach was the last thing I wanted to do.
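The path fix itself is simple to state: keep everything up to and including chunks, drop the local path that was inserted after it, and keep the file name. As a small illustration (in Python, not taken from the original script), the mapping could look like the sketch below. The function name `corrected_blob_name` and the sample blob names are made up for the example, and it assumes the file name itself should stay unchanged.

```python
def corrected_blob_name(name: str, marker: str = "chunks") -> str:
    """Map '<guid>/chunks/<local path>/<file>' to '<guid>/chunks/<file>'.

    Names that have no 'chunks' segment, or whose file already sits
    directly under 'chunks', are returned unchanged.
    """
    parts = name.split("/")
    if marker not in parts:
        return name  # e.g. a metadata file next to the chunks folder

    i = parts.index(marker)
    if len(parts) - i <= 2:
        return name  # nothing, or only the file name, follows 'chunks'

    # Keep '<guid>/chunks' and the final segment; drop what sits in between.
    return "/".join(parts[: i + 1] + [parts[-1]])


if __name__ == "__main__":
    # Hypothetical blob name, shaped like the misplaced ones in this post.
    print(corrected_blob_name("01ABC/chunks/c:/users/me/tmp/000001"))
```

With the hypothetical name above, the result is `01ABC/chunks/000001`; a name that is already in the right place comes back untouched, which makes the function safe to run over a full blob listing.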

AzCopy and PowerShell to the rescue

I already knew my way around AzCopy, but I did not know that the copy actually runs on the Azure backbone when you copy within or between storage accounts. Luckily, my dear Twitter friends were there to help where I had failed to read the documentation.

To perform the copy operation I used a combination of Azure PowerShell and AzCopy.

- Connect

- Get all current blobs

- Filter them

- Actually copy

- Second loop to delete
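The steps above can be sketched roughly as follows. This is a Python illustration and not the PowerShell script from this post: it assumes the blob names have already been listed, that a SAS token is used for authentication, and that `azcopy copy` and `azcopy remove` are called once per blob. The container URL, token and helper names (`blob_url`, `plan_move`) are hypothetical. The sketch only builds the command lines, so they can be reviewed before anything is run.

```python
from urllib.parse import quote


def blob_url(container_url: str, blob_name: str, sas: str) -> str:
    """Build a blob URL with a SAS token, percent-encoding the blob name."""
    return f"{container_url}/{quote(blob_name, safe='/')}?{sas}"


def plan_move(blob_names, container_url, sas):
    """Return (copies, deletes): azcopy argument lists for misplaced chunks."""
    copies, deletes = [], []
    for name in blob_names:
        head, sep, tail = name.partition("/chunks/")
        # Filter: skip blobs outside 'chunks' and blobs already in place.
        if not sep or "/" not in tail:
            continue

        # Destination keeps only the file name directly under 'chunks'.
        target = f"{head}/chunks/{tail.rsplit('/', 1)[-1]}"
        src = blob_url(container_url, name, sas)
        dst = blob_url(container_url, target, sas)

        copies.append(["azcopy", "copy", src, dst])
        # Deletes are collected separately so they can run in a second
        # loop, after the copies have been verified.
        deletes.append(["azcopy", "remove", src])
    return copies, deletes


if __name__ == "__main__":
    # Hypothetical listing and account; replace with real values.
    names = ["01ABC/meta.json", "01ABC/chunks/c:/tmp/000002"]
    copies, deletes = plan_move(
        names, "https://example.blob.core.windows.net/thanos", "sv=fake"
    )
    for cmd in copies + deletes:
        print(" ".join(cmd))
```

Each argument list could then be handed to `subprocess.run` once the plan looks right. Keeping the deletes in their own list mirrors the second loop in the steps above, so nothing is removed before the copied blobs have been checked.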

Below is my complete script. It could be way smarter, but I quickly put it together to get the job done.

Summary

I hope this helps someone else who accidentally uploads a lot of data to the wrong place. And if you happen to be using Thanos: I filed this as a bug.
