Automation

Using Azure OpenAI ChatGPT for incidents

The Challenge of Long Chat Threads During Incidents

Everyone is an AI expert these days. Since OpenAI released ChatGPT, it’s almost impossible to work in tech without being shown good (and bad) examples of what the service can do for you. I am, of course, also curious about what these services can help with, but until very recently I didn’t have a use case that would bring real value to me or the company I work for. GitHub Copilot helps our developers with programming, and it helps me from time to time as well. Maybe even more, as I have no idea what I am doing in most cases.

I used Azure OpenAI with the GPT-3.5 Turbo language model (deployed as gpt-35-turbo in Azure), the same model family behind the public ChatGPT. We chose Azure OpenAI so that we can use and train the model with our company-specific data. At the time of writing, access to the service is granted on request only.

Anyway, let’s dive into the actual problem at hand. As an incident responder, you know how important it is to keep track of what happened during an incident. When the incident is over, you need to have a clear summary of what happened to help you identify what went wrong and how you can prevent similar incidents in the future. But what if you have hundreds or even thousands of chat messages to go through? That’s where Azure OpenAI and ChatGPT come in.

Using PowerShell and Slack API to Retrieve Chat Threads from Slack

At Vipps, Slack is our primary communication tool during incidents. After each incident, we need to go through the chat threads to understand what happened. We used to do this manually, but it was time-consuming and error-prone. That’s why we decided to automate the process using Azure OpenAI and ChatGPT.

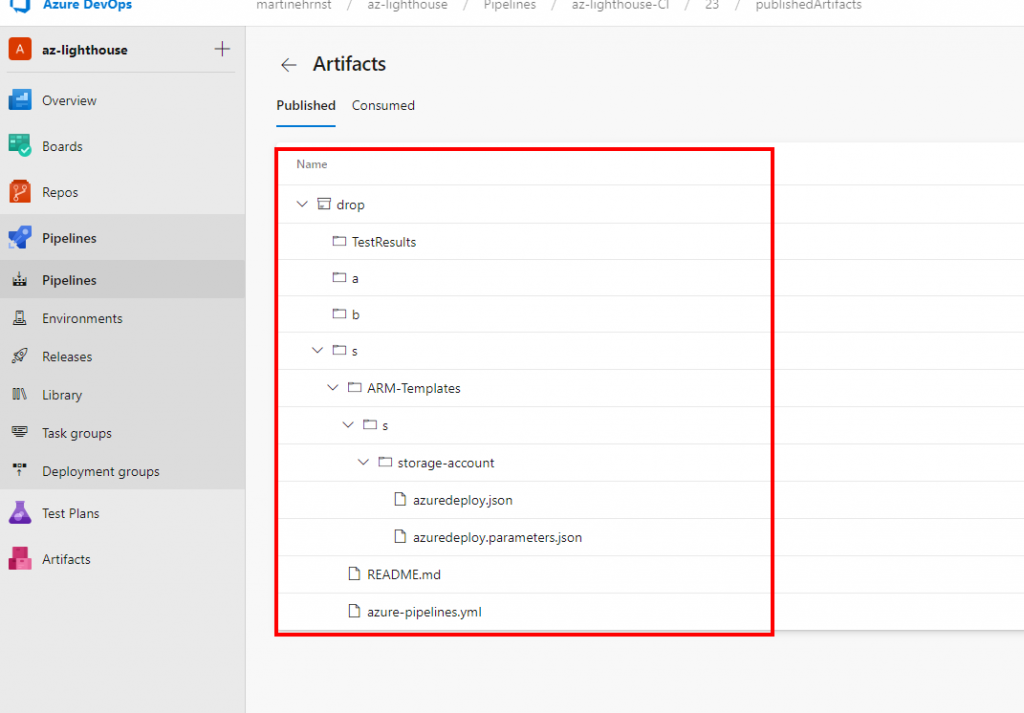

First, I used PowerShell and the Slack API to retrieve the individual messages from Slack threads. I then passed the chat threads to Azure OpenAI’s GPT-3.5 Turbo model, which generated a summary of the incident based on the chat messages.
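The summarization step boils down to one call to the Azure OpenAI chat completions REST endpoint. The sketch below shows one way to make that call from PowerShell; it is not the script used at Vipps. The resource name, deployment name, `AZURE_OPENAI_API_KEY` environment variable, API version and system prompt are all assumptions to replace with your own values.

```powershell
# Sketch: summarize an incident transcript with an Azure OpenAI chat deployment.
# Assumptions: resource/deployment names, API version, env var name and prompt wording.

function New-SummaryRequestBody {
    param(
        [Parameter(Mandatory)] [string] $Transcript,
        [int] $MaxTokens = 500
    )
    $body = @{
        messages    = @(
            @{ role = 'system'; content = 'Summarize this incident chat. Include a timeline, the likely root cause and follow-up actions.' },
            @{ role = 'user'; content = $Transcript }
        )
        temperature = 0.2          # low temperature keeps the summary close to the source text
        max_tokens  = $MaxTokens   # upper bound on the length of the generated summary
    }
    # -Depth 5 so the nested message objects are serialized instead of flattened to strings
    return ($body | ConvertTo-Json -Depth 5)
}

function Get-IncidentSummary {
    param(
        [Parameter(Mandatory)] [string] $Transcript,
        [string] $Resource   = 'YOUR_RESOURCE_NAME',      # placeholder
        [string] $Deployment = 'YOUR_DEPLOYMENT_NAME',    # placeholder, e.g. a gpt-35-turbo deployment
        [string] $ApiVersion = '2023-05-15',
        [string] $ApiKey     = $env:AZURE_OPENAI_API_KEY  # hypothetical env var name
    )
    $request = @{
        Method      = 'Post'
        Uri         = "https://$Resource.openai.azure.com/openai/deployments/$Deployment/chat/completions?api-version=$ApiVersion"
        Headers     = @{ 'api-key' = $ApiKey }
        ContentType = 'application/json; charset=utf-8'
        # Send UTF-8 bytes so non-ASCII characters (æ, ø, å) survive on Windows PowerShell 5.1
        Body        = [System.Text.Encoding]::UTF8.GetBytes((New-SummaryRequestBody -Transcript $Transcript))
    }
    $response = Invoke-RestMethod @request
    # The generated text is in the first choice's message
    return $response.choices[0].message.content
}
```

Calling `Get-IncidentSummary -Transcript $transcript` returns the model’s summary as a string. The low temperature is a deliberate choice: for incident summaries you usually want the model to stay close to what was actually said rather than be creative.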

Setting up a Slack App to Use the Slack API

To use the Slack API to retrieve chat threads, you’ll need to set up a Slack app and obtain an API token. Here’s how you can do it:

- Go to the Slack API website (https://api.slack.com) and sign in with your Slack account.

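- Click the “Create New App” button, give your app a name and choose a development workspace.

- In the app dashboard, navigate to the “OAuth & Permissions” section and add the “channels:history” scope to your bot token scopes (add “groups:history” as well if your incident channels are private).

- Install the app in your workspace and copy the Bot User OAuth Token (it starts with “xoxb-”).

- Invite the app to the incident channel (for example with “/invite @your-app-name”); otherwise Slack returns a “not_in_channel” error.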

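- In PowerShell, use the following code to retrieve the messages in the incident channel. Slack expects the token in an Authorization header rather than in the query string:

```powershell
$token = "YOUR_BOT_TOKEN"      # Bot User OAuth Token (xoxb-...)
$channelId = "CHANNEL_ID"

# Pass the token as a Bearer token instead of a query parameter
$headers = @{ Authorization = "Bearer $token" }
$url = "https://slack.com/api/conversations.history?channel=$channelId"

$response = Invoke-RestMethod -Uri $url -Headers $headers
# Slack returns HTTP 200 even on failure, so check the "ok" field
if (-not $response.ok) { throw "Slack API error: $($response.error)" }
```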
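`conversations.history` returns the top-level messages in a channel; the replies inside a thread are fetched separately with `conversations.replies`, using the `ts` of the thread’s parent message. Below is a minimal sketch, assuming a bot token with the scopes listed above. The function names are hypothetical, and the transcript format (UTC time, user ID, text) is just one reasonable choice.

```powershell
# Sketch: fetch all replies in one Slack thread and format them as a plain-text transcript.
# $ThreadTs is the "ts" value of the thread's parent message.

function Get-SlackThread {
    param(
        [Parameter(Mandatory)] [string] $Token,
        [Parameter(Mandatory)] [string] $ChannelId,
        [Parameter(Mandatory)] [string] $ThreadTs
    )
    $headers  = @{ Authorization = "Bearer $Token" }
    $messages = @()
    $cursor   = $null
    do {
        $url = "https://slack.com/api/conversations.replies?channel=$ChannelId&ts=$ThreadTs&limit=200"
        if ($cursor) { $url += "&cursor=$([uri]::EscapeDataString($cursor))" }
        $response = Invoke-RestMethod -Uri $url -Headers $headers
        if (-not $response.ok) { throw "Slack API error: $($response.error)" }
        $messages += $response.messages
        # next_cursor is empty (or missing) on the last page, which ends the loop
        $cursor = $response.response_metadata.next_cursor
    } while ($cursor)
    return $messages
}

function ConvertTo-Transcript {
    param([Parameter(Mandatory)] [object[]] $Messages)
    $lines = foreach ($m in $Messages) {
        # Slack "ts" values are Unix epoch seconds with a fractional part, e.g. "1683000000.123456"
        $seconds = [long][math]::Floor([double]$m.ts)
        $utc  = [DateTimeOffset]::FromUnixTimeSeconds($seconds).UtcDateTime
        $time = $utc.ToString('yyyy-MM-dd HH:mm', [cultureinfo]::InvariantCulture)
        "[$time UTC] $($m.user): $($m.text)"
    }
    return ($lines -join "`n")
}
```

For example, `ConvertTo-Transcript -Messages (Get-SlackThread -Token $token -ChannelId $channelId -ThreadTs $threadTs)` produces one line per message. The transcript shows Slack user IDs; resolving them to names would need an extra call to `users.info` and the `users:read` scope.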

Putting the Complete PowerShell Script Together

Below is a complete PowerShell script. I have redacted some company-specific information, so feel free to use and modify it as needed. You may need to filter out more than I did, such as messages from specific users you do not want to include, HTML content, and so on.
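As a rough illustration of that kind of filtering (not the redacted script itself), the hypothetical helpers below drop bot posts, channel-join notices, excluded users and empty messages, and turn Slack’s message markup into plain text. Which messages count as noise is an assumption you will want to adjust for your own channels.

```powershell
# Sketch: drop noise from Slack messages and convert Slack markup to plain text.
# What counts as noise (bots, joins, specific users) is an assumption; adjust for your channels.

function Select-RelevantMessages {
    param(
        [Parameter(Mandatory)] [object[]] $Messages,
        [string[]] $ExcludedUserIds = @()   # hypothetical: users whose posts you don't want summarized
    )
    foreach ($m in $Messages) {
        if ($m.bot_id) { continue }                       # posted by a bot or integration
        if ($m.subtype -eq 'channel_join') { continue }   # "<user> has joined the channel"
        if ($ExcludedUserIds -contains $m.user) { continue }
        if ([string]::IsNullOrWhiteSpace($m.text)) { continue }
        $m   # emit the message to the pipeline
    }
}

function Convert-SlackMarkup {
    param([string] $Text)
    # <https://example.com|label> becomes "label"; a bare <https://example.com> becomes the URL
    $Text = $Text -replace '<(https?://[^|>]+)\|([^>]+)>', '$2'
    $Text = $Text -replace '<(https?://[^>]+)>', '$1'
    # <@U123ABC> user mentions become @U123ABC
    $Text = $Text -replace '<@([A-Z0-9]+)(\|[^>]*)?>', '@$1'
    # Slack escapes &, < and > as HTML entities; decode &amp; last to avoid double-decoding
    return $Text.Replace('&lt;', '<').Replace('&gt;', '>').Replace('&amp;', '&')
}
```

Run `Select-RelevantMessages` first and then `Convert-SlackMarkup` on each remaining message’s text before building the prompt, so the model sees readable sentences instead of Slack’s link and mention syntax.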

Summary and things to know

When using Azure OpenAI and ChatGPT to generate incident summaries, keep in mind that the model only analyzes text. If the chat messages contain images or other non-text data, the model cannot interpret them.

If your incident chat threads contain images or other non-text data, you may need to find other ways to include that information, for example by writing out the key findings from a screenshot as a chat message.
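One simple option is to replace each attachment with a short text placeholder, so the summary at least mentions that a screenshot or file was shared. The sketch assumes the `files` array Slack includes on messages with uploads and uses its `title`, `name` and `mimetype` fields; the function name and placeholder format are hypothetical. The model still cannot see what the image shows.

```powershell
# Sketch: append a text placeholder for each file attached to a Slack message.
# Placeholder format is arbitrary; the model only learns that a file existed, not its content.

function Add-AttachmentPlaceholders {
    param([Parameter(Mandatory)] $Message)
    $text = [string]$Message.text
    # @($null) yields a one-element array, so skip null entries explicitly
    foreach ($f in @($Message.files)) {
        if ($null -eq $f) { continue }
        $label = if ($f.title) { $f.title } else { $f.name }
        $text += " [attachment: $label ($($f.mimetype))]"
    }
    return $text.Trim()
}
```

A message whose only content is a screenshot then becomes something like `[attachment: CPU spike (image/png)]` instead of disappearing from the transcript.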

Another important consideration when using Azure OpenAI and ChatGPT to summarize Slack incident threads is the model’s token limit. The gpt-35-turbo model (versions 0301 and 0613) has a context window of 4,096 tokens, shared between the prompt (the chat messages you send) and the generated summary. If your chat threads are particularly long, you may need to split them into multiple inputs to generate a complete summary.
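A common way to handle long threads is to split the transcript into chunks that each fit within the model’s context window, summarize each chunk, and then ask the model to combine the partial summaries. The sketch below splits on line boundaries using the rule of thumb of roughly four characters per token for English text. That is an approximation; exact counts need the model’s tokenizer (for example OpenAI’s tiktoken library with the `cl100k_base` encoding). The function names and the default budget are assumptions.

```powershell
# Sketch: split a transcript into chunks that stay under an approximate token budget.
# ~4 characters per token is a rule of thumb for English text, not an exact tokenizer.

function Get-ApproxTokenCount {
    param([string] $Text)
    return [math]::Ceiling($Text.Length / 4)
}

function Split-Transcript {
    param(
        [Parameter(Mandatory)] [AllowEmptyString()] [string[]] $Lines,
        [int] $MaxTokensPerChunk = 2000   # keep well below the model's context window
    )
    $chunks  = [System.Collections.Generic.List[string]]::new()
    $current = [System.Collections.Generic.List[string]]::new()
    $size    = 0
    foreach ($line in $Lines) {
        $cost = Get-ApproxTokenCount -Text $line
        # Start a new chunk when adding this line would exceed the budget
        if ($current.Count -gt 0 -and ($size + $cost) -gt $MaxTokensPerChunk) {
            $chunks.Add($current -join "`n")
            $current.Clear()
            $size = 0
        }
        $current.Add($line)
        $size += $cost
    }
    if ($current.Count -gt 0) { $chunks.Add($current -join "`n") }
    return $chunks
}
```

Wrap the call in `@()` (for example `$chunks = @(Split-Transcript -Lines ($transcript -split "`n"))`) so a single chunk still comes back as an array. A single line longer than the budget ends up in its own oversized chunk, so very long pasted logs may still need trimming.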

In addition, Azure OpenAI uses token-based pricing: you are charged both for the tokens you send in the prompt and for the tokens the model generates. If you’re generating a large number of summaries or working with particularly long chat threads, this can quickly become a significant expense.
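To get a feel for the cost, multiply the token counts by the per-1,000-token prices for your model. Each chat completions response reports the actual counts in its `usage` object (`prompt_tokens` and `completion_tokens`). The function name is hypothetical, and the prices in the example call are illustrative placeholders, not Azure’s actual rates; check the Azure OpenAI pricing page for your model and region.

```powershell
# Sketch: estimate the cost of one request from its token counts.
# Prices are parameters because they vary by model, region and over time.

function Get-EstimatedCost {
    param(
        [Parameter(Mandatory)] [int] $PromptTokens,
        [Parameter(Mandatory)] [int] $CompletionTokens,
        [Parameter(Mandatory)] [decimal] $PromptPricePer1K,
        [Parameter(Mandatory)] [decimal] $CompletionPricePer1K
    )
    # Decimal arithmetic avoids floating-point rounding noise in currency amounts
    $prompt     = ([decimal]$PromptTokens / 1000) * $PromptPricePer1K
    $completion = ([decimal]$CompletionTokens / 1000) * $CompletionPricePer1K
    return $prompt + $completion
}

# Illustrative prices only, not real Azure rates:
# Get-EstimatedCost -PromptTokens 3000 -CompletionTokens 500 -PromptPricePer1K 0.001 -CompletionPricePer1K 0.002
```

With real responses you can pass `$response.usage.prompt_tokens` and `$response.usage.completion_tokens` directly and sum the results across all chunks of an incident.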

By being mindful of these token limitations and experimenting with different summarization strategies, you can still use Azure OpenAI and ChatGPT to generate valuable incident summaries that can help you improve your incident response process.