Azure AD authentication in Azure Functions

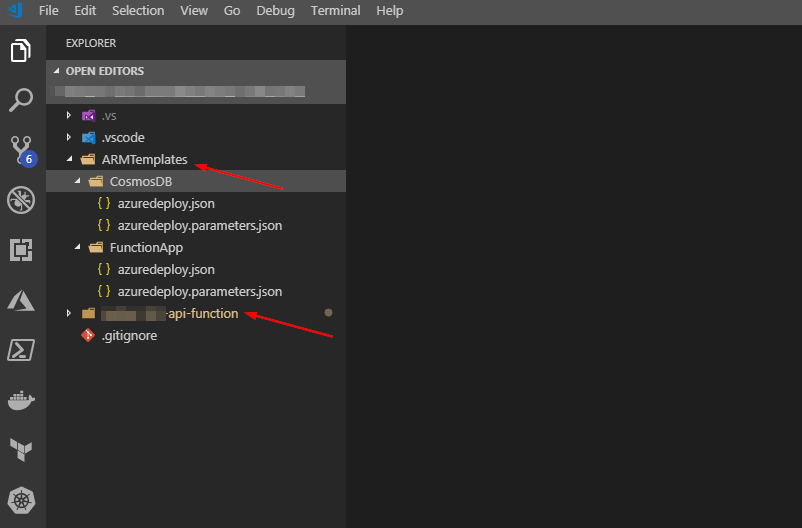

Ever had the need to enable Azure Active Directory authentication in Azure Functions? In a recent project, I wanted to use Azure Functions with both system-to-system and user-based authentication. Because Azure Functions is part of the App Service family in Azure, it shares many of the same features, and authentication is one of them.

Enable authentication

The scope of this blog post is not to show you how to build an Azure Function, but how to enable Azure AD authentication on it. You can add auth to your existing function or create a new one using your method of choice. For simplicity, I will show the process using the Azure portal.

To enable authentication in your Azure Function, navigate to “Authentication/authorization”. This opens a series of blades that guide you through the process.

If you’re not familiar with Azure AD and custom application registrations, I recommend that you use the Express option. This will create the needed application in AAD for you.

Change to anonymous authentication

By default, Azure Functions uses something called “Function authentication”, where every request carries a code parameter at the end of the URL.

https://my-function-app.azurewebsites.net/api/function-name?code=xyzx-zyxx...

We want to have Azure AD perform authentication and authorization, and not the function itself.

Within the GUI, it’s just a flick of a switch. If you are developing locally using C#, you typically do this:

    // Anonymous turns off the function key check, so Azure AD handles access.
    public static HttpResponseMessage Run(
        [HttpTrigger(AuthorizationLevel.Anonymous)] HttpRequestMessage request)
    {
        // logic
    }

Enable user assignment

After changing the authorization level and enabling AAD authentication, all users in your organization will automatically have access. If you want more granular control over who has access to your application, you should enable user assignment.

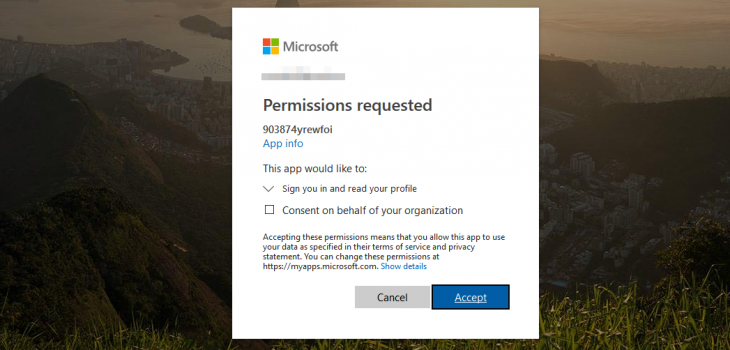

To enable user assignment, navigate to Enterprise applications under AAD and look up the app created by the wizard. The enterprise app is the service principal representing the application you created, that is, your Azure Function.

Under Properties, find the switch for user assignment and turn it on. Then navigate to your function URL and check that it works, meaning that access is denied.

Afterwards, add your own user and verify that authentication works through Azure AD.
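One way to confirm which identity reached the function is to read the header that App Service authentication adds to authenticated requests. The sketch below is a minimal helper under a few assumptions: the class and method names are made up for illustration, and the `X-MS-CLIENT-PRINCIPAL-NAME` header is only meaningful when the request has passed through App Service authentication (it will be absent when you run the function locally).

```csharp
using System.Linq;
using System.Net.Http;

// Hypothetical helper, not part of the original function code.
public static class CallerInfo
{
    // Returns the signed-in user's name as forwarded by App Service
    // authentication, or null when the header is not present.
    public static string GetCallerName(HttpRequestMessage request)
    {
        return request.Headers.TryGetValues("X-MS-CLIENT-PRINCIPAL-NAME", out var values)
            ? values.FirstOrDefault()
            : null;
    }
}
```

Calling `CallerInfo.GetCallerName(request)` from inside the function and logging the result is usually enough to see whether your own user came through after you were assigned to the app.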

If you want other applications (clients) to call your function, you will have to assign them API access. Just as you grant access to, for example, the Microsoft Graph API, you will find your custom application listed there as well.

This will not work right away. By default, there are no application roles assigned, only delegated permissions. For client authentication to work, you will need to add custom roles to the app representing your Azure Function. It is not difficult, but I spent too much time figuring it out. Microsoft has it documented here
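When troubleshooting missing roles, it can help to look inside the access token the client received. In app-only tokens, granted application roles show up in the `roles` claim. The following is a small debugging sketch that decodes the token payload and lists that claim. The class and method names are hypothetical, and the code does not validate the signature, so it is only suitable for inspection, never for making access decisions.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.Json;

// Hypothetical debugging helper: reads the "roles" claim from a JWT payload.
public static class TokenInspector
{
    public static List<string> ReadRoles(string jwt)
    {
        string[] parts = jwt.Split('.');
        if (parts.Length != 3)
        {
            throw new ArgumentException("Expected header.payload.signature", nameof(jwt));
        }

        // Convert base64url to regular base64 and restore the padding.
        string payload = parts[1].Replace('-', '+').Replace('_', '/');
        payload = payload.PadRight(payload.Length + (4 - payload.Length % 4) % 4, '=');

        string text = Encoding.UTF8.GetString(Convert.FromBase64String(payload));
        using JsonDocument json = JsonDocument.Parse(text);

        var roles = new List<string>();
        if (json.RootElement.TryGetProperty("roles", out JsonElement claim)
            && claim.ValueKind == JsonValueKind.Array)
        {
            foreach (JsonElement role in claim.EnumerateArray())
            {
                roles.Add(role.GetString());
            }
        }
        return roles; // Empty when no application role has been granted.
    }
}
```

An empty list for a client token is a strong hint that the app role has not been defined on the function's app registration or not yet granted to the client.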

Authenticate with code

Chances are that your Azure Function is not a graphical website, so I assume you want to authenticate using code, either with your own user or with a separate application/secret combination (app credentials).

The great thing about this is that it works just like any other Microsoft/Azure API. If you know how to get a token from Microsoft, you can use the same techniques against your function. My example below shows how to retrieve a token for our Azure Function and use that bearer token against the function. I use a client application in this scenario.
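A minimal C# sketch of that flow, not necessarily the exact script used in the project, could look like this. It assumes the OAuth 2.0 client credentials grant against the Microsoft identity platform v2.0 token endpoint and a scope built from the function app's application ID URI. The class name, method names, and environment variable names are all made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical client: gets an app-only token and calls the function with it.
public static class FunctionClient
{
    // Form fields for a client credentials token request.
    public static Dictionary<string, string> BuildTokenForm(
        string clientId, string clientSecret, string appIdUri)
    {
        return new Dictionary<string, string>
        {
            ["grant_type"] = "client_credentials",
            ["client_id"] = clientId,
            ["client_secret"] = clientSecret,
            // ".default" asks for the application roles already granted to the client.
            ["scope"] = appIdUri + "/.default",
        };
    }

    public static async Task<string> GetTokenAsync(
        HttpClient http, string tenantId, Dictionary<string, string> form)
    {
        string url = $"https://login.microsoftonline.com/{tenantId}/oauth2/v2.0/token";
        using HttpResponseMessage response =
            await http.PostAsync(url, new FormUrlEncodedContent(form));
        response.EnsureSuccessStatusCode();
        using JsonDocument json =
            JsonDocument.Parse(await response.Content.ReadAsStringAsync());
        return json.RootElement.GetProperty("access_token").GetString();
    }

    public static async Task<string> CallFunctionAsync(
        HttpClient http, string functionUrl, string accessToken)
    {
        using var request = new HttpRequestMessage(HttpMethod.Get, functionUrl);
        // No ?code= parameter here: the bearer token replaces the function key.
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        using HttpResponseMessage response = await http.SendAsync(request);
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }

    public static async Task Main()
    {
        // Assumed environment variable names; replace with your own configuration.
        string tenantId = Environment.GetEnvironmentVariable("AAD_TENANT_ID");
        string clientId = Environment.GetEnvironmentVariable("AAD_CLIENT_ID");
        string clientSecret = Environment.GetEnvironmentVariable("AAD_CLIENT_SECRET");
        string appIdUri = Environment.GetEnvironmentVariable("FUNCTION_APP_ID_URI");
        string functionUrl = Environment.GetEnvironmentVariable("FUNCTION_URL");

        using var http = new HttpClient();
        var form = BuildTokenForm(clientId, clientSecret, appIdUri);
        string token = await GetTokenAsync(http, tenantId, form);
        Console.WriteLine(await CallFunctionAsync(http, functionUrl, token));
    }
}
```

If the function answers with 401 or 403 even though a token was issued, the usual suspects are a scope that does not match the function's application ID URI or a client that has not been granted an application role.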

Summary

This feature is great. I consider myself a modern IT operations guy, and an operations role these days requires more coding and scripting. It is super easy to expose things on the internet. But remember, it might be just as easy to secure them.

I have no idea how to implement an authentication layer myself, so if I can use one of the best, I’m all aboard.