Automation

Using Azure Pipelines to deploy ARM templates

There are many reasons to use an Azure Resource Manager (ARM) template to deploy your Azure resources, and there are equally many ways to deploy this kind of template.

For simple one-time deployments of Azure resources I tend to use PowerShell or the Azure CLI, but lately I have been busy trying to create a web API based on Azure Functions using C# and .NET Core. For those who know me, that’s not straightforward.

To make things even more difficult, I wanted to use Azure DevOps and Pipelines to build and push my code. To do that, I had to have some infrastructure in place, so why wouldn’t I use Azure Pipelines to deploy the ARM template as well?

Create ARM template

In order to deploy Azure Resource Manager templates, you will need to create one. ARM templates are written in JSON and follow a schema. This schema, or rule set if you like, defines how you must structure your template.
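To make that structure a bit more concrete, here is a minimal sketch in Python (not something from my project) that builds an empty template and checks it for the top-level elements a template must have. The helper names are made up for illustration, and the schema URL is one published version of the resource group deployment schema, so yours may differ.

```python
import json

# One published version of the resource group deployment schema (assumption:
# your template may reference a different version).
RG_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"

# Top-level elements that every ARM template must contain.
REQUIRED_KEYS = ("$schema", "contentVersion", "resources")


def empty_template():
    """Return a template skeleton with no resources (hypothetical helper)."""
    return {
        "$schema": RG_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {},
        "variables": {},
        "resources": [],
        "outputs": {},
    }


def missing_keys(template):
    """List the required top-level elements that the template lacks."""
    return [key for key in REQUIRED_KEYS if key not in template]


if __name__ == "__main__":
    # Print the skeleton as JSON, ready to be filled in with resources.
    print(json.dumps(empty_template(), indent=2))
```

The parameters, variables and outputs sections are optional, which is why the check only looks for the other three.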

The two most common deployment scopes are resource group level (the usual choice) and subscription level. Both use the same JSON language but have different schemas. You’ll find the current resource group deployment schema here (no need to read :))
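Since the schema is what separates the two, you can tell them apart by looking at the file name at the end of the $schema URL. A small sketch, assuming the usual schema file names (deploymentTemplate.json and subscriptionDeploymentTemplate.json); the function name is my own invention:

```python
def deployment_scope(template):
    """Guess the deployment scope from the schema file named in $schema."""
    schema = template.get("$schema", "")
    # Drop the trailing '#' and keep only the last path segment.
    schema_file = schema.rstrip("#").rsplit("/", 1)[-1]
    if schema_file == "deploymentTemplate.json":
        return "resource group"
    if schema_file == "subscriptionDeploymentTemplate.json":
        return "subscription"
    # Anything else (or a missing $schema) is left undecided.
    return "unknown"
```

This only reads the template; it does not validate it against the schema.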

If you are unfamiliar with JSON and ARM templates, I recommend reading Microsoft’s official documentation and getting started guide, which show how you can export the template from an existing deployment or create a new one.

For reference, this is a simple template to deploy a web app. I copied it from Microsoft’s GitHub repo, which has a bunch of templates to get you started.

Adding the ARM template alongside your code

As I already mentioned, deploying ARM templates in a pipeline makes sense in many ways, especially if you have developed an application. This way you can control the infrastructure alongside the code.

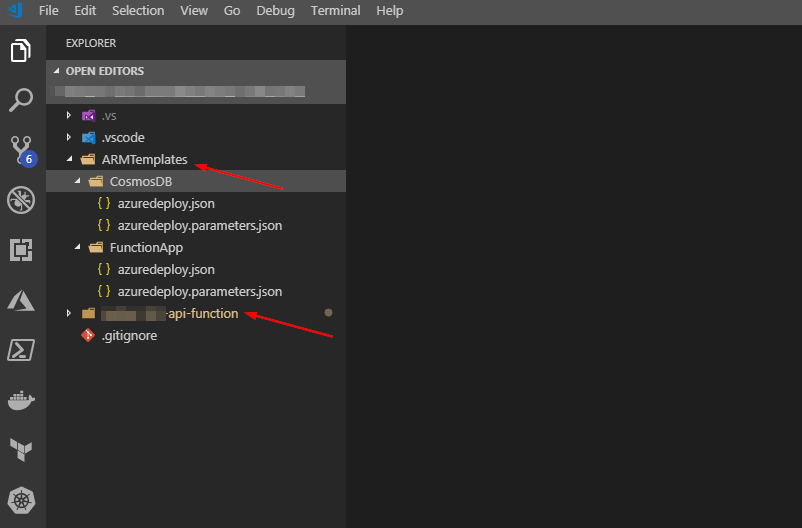

My Azure Function uses Cosmos DB and Key Vault as parts of its infrastructure. It is all developed in Visual Studio Code, and the whole thing is cutting edge for an old operations guy like me.

As the picture shows, I have structured my project into two subfolders. One holds the ARM templates, and the other holds the actual function code. I did this so I would be able to choose artifacts from the build process in Azure DevOps pipelines. There might be a better way, but when I had everything in the same folder, the templates were packed with the code and not available in the deployment pipeline.

Set up the build pipeline

Not long ago I learned that there are two types of pipelines: build pipelines and release pipelines. A build pipeline packs up or actually builds the application (much like pressing F5 in Visual Studio), whereas a release pipeline pushes the application onto your infrastructure (and deploys the infra).

For reference, my folder structure looks exactly the same in Azure DevOps.

Next, move over to Pipelines and create a new build pipeline. From here, choose your repository (I use Azure Repos).

After choosing your repo, you can choose to start from a template. My project is a .NET Core application and I had no idea what was needed to build it, so a template worked nicely. Here you can choose what’s best for your project. The important pieces from an ARM template perspective come in the next steps.

Start out by filling in the obvious: a name and a build server (agent) to do the build. In my case I run with the Azure-hosted 2017 agent. In an on-premises environment I would use private servers.

Add your templates as build artifacts

What you will need to do next is take the JSON files in your ARM template folder and make them available as part of the build. Add a “Copy files” step and fill in a few properties. Pay attention to the source folder and to the destination folder, which has a variable reference: $(build.artifactstagingdirectory)/arm
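If it helps to see what the “Copy files” step boils down to, here is a rough Python sketch of the same idea. It is not what the task runs internally, just an illustration. It assumes the templates live in a folder called arm (a name I picked for the example) and relies on Azure Pipelines exposing $(build.artifactstagingdirectory) to scripts as the environment variable BUILD_ARTIFACTSTAGINGDIRECTORY.

```python
import os
import shutil
from pathlib import Path


def copy_templates(source_dir, staging_dir):
    """Copy every .json file from source_dir into <staging_dir>/arm."""
    target = Path(staging_dir) / "arm"
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for template in sorted(Path(source_dir).glob("*.json")):
        shutil.copy2(template, target / template.name)
        copied.append(template.name)
    return copied


if __name__ == "__main__":
    # Fall back to a local "staging" folder when run outside a pipeline.
    staging = os.environ.get("BUILD_ARTIFACTSTAGINGDIRECTORY", "staging")
    # "arm" is an assumed name for the template folder in the repo.
    print(copy_templates("arm", staging))
```

Only the JSON files end up under arm in the staging directory, which is what keeps the templates separate from the packed function code.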

Now hit save and queue. This will save your new (or edited) pipeline and start a build of your project.

By now you should have a build running, and if you’re like me, exploring new stuff, you get used to seeing it fail.

In this particular error, the publish task for .NET Core is missing the wwwroot folder. By trial and error I found that I had to make a few changes to my build pipeline. This is not a prerequisite for running ARM templates with Azure Pipelines, but I figured I should include everything I had to do for this project, both for my reference and for yours if needed.

I removed “publish web projects” as I don’t have an ordinary web project, but an Azure Functions based API.

When you remove this tick, the task will refer to your project file (.csproj).

Here I should give a shout-out to my trusty colleague Emil Kjelsrud for helping out, once again.

Hit save and queue once again. Voila!

Configure the release pipeline with ARM templates

When your build is successful, we’re close to launching our application on Azure. We do that by configuring a release pipeline. It is possible to combine the two, but I like them separated.

Under Pipelines > Releases, hit New. A wizard will pop up. Either choose a template that fits your purpose, or start from scratch. In my case I chose to start with App Service deployment. One of the first things you notice is “some settings need attention.”

Required settings in this context are the connection to your Azure environment and subscription. You will need to configure this in order to continue. There are several methods available for connecting your pipelines to Azure. I have my pipeline deploying resources in a tenant and subscription that my user does not have access to. That configuration requires a service principal (SPN/app registration) in Azure AD with the correct permissions.

After configuring your connection, modify your pipeline by adding the Azure Resource Group Deployment tasks you need. Here come a few important bits. Prior to setting up my pipeline, I had already created a resource group. If you want the resource group created during deployment, you can use the Azure CLI or Azure PowerShell tasks (or a template) to do so.

Continue to fill in the required parameters. Remember that I separated my application code and the templates into separate folders? This is why. I can now choose from the same artifact what I want to deploy, as the build pipeline has them available in their respective folders. Choose the template and your parameters file.
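If you haven’t made a parameters file before: it is a small JSON document that wraps each value in its own object. A sketch of that layout in Python, where the function name and the siteName parameter are made up for the example and the schema URL is one published version of the parameters schema:

```python
import json

# One published version of the deployment parameters schema (assumption:
# your file may reference a different version).
PARAMS_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"


def parameters_file(values):
    """Wrap plain name/value pairs in the ARM parameters file layout."""
    return {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        # Each parameter becomes {"value": ...} under its own name.
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


if __name__ == "__main__":
    # "siteName" is a hypothetical parameter name.
    print(json.dumps(parameters_file({"siteName": "my-function-app"}), indent=2))
```

The names under parameters have to match the parameters declared in the template you chose.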

In this Azure DevOps environment we have an extension available that parses all outputs from your ARM templates. This way I can use variables from the previous step in my App Service deployment. If you don’t have this extension available, you can achieve the same result using PowerShell.
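I won’t pretend to know exactly how the extension does it, but the idea can be sketched in a few lines. The example below uses Python rather than PowerShell and assumes the deployment outputs are available to your script as a JSON string (how you get hold of that string depends on your task and its version). It turns each output into a task.setvariable logging command, which is how a script hands a variable to later steps in Azure Pipelines. The function name and the storageName output are hypothetical.

```python
import json


def output_variables(outputs_json):
    """Turn ARM deployment outputs into Azure Pipelines logging commands."""
    outputs = json.loads(outputs_json)
    commands = []
    for name, output in outputs.items():
        # Each output looks like {"type": "String", "value": "..."}.
        # Only simple values are handled; objects and arrays would need
        # to be serialized first.
        commands.append(f"##vso[task.setvariable variable={name}]{output['value']}")
    return commands


if __name__ == "__main__":
    # "storageName" is a hypothetical output name.
    sample = '{"storageName": {"type": "String", "value": "mystorage"}}'
    for command in output_variables(sample):
        # Printing the command to standard output is what sets the variable.
        print(command)
```

After this, a later task in the same job could refer to the value as $(storageName).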

Create the release

Your pipeline is now complete. Save and create a release; the pipeline should now pick up the latest build and deploy your code to Azure. You can follow the whole process in Azure, under resource group > Deployments, and in Azure DevOps.

After a few minutes (or seconds), depending on your configuration, you should have both your infrastructure and the actual application available.

Final thoughts

While I understand many of the concepts around infrastructure as code, continuous integration and so on, I don’t always use the correct terms, so thank you for reading.

I hope you also have a greater understanding of the concepts, and of how you can utilize the possibilities available. My thoughts when working with projects like this are always bound to the operational side. I know I’m going to use more ARM templates in Azure Pipelines, but probably not alongside the actual code, as that’s not my day-to-day work. Again, I see myself working more and more with these dev tools, and that’s why I like to say that this is how we do modern Ops.

I learned a few more tips and tricks during this small project, so expect a few more posts soon.